Step into the future of sound: vocal processing techniques in 2025 are redefining how we capture, shape, and deliver the human voice in music, film, and virtual spaces. From AI-powered tuning to immersive spatial rendering, the tools are changing faster than ever.

Vocal Processing Techniques in 2025: The Evolution of Voice in Modern Production

The year 2025 marks a turning point in audio engineering: vocal processing is no longer just about pitch correction or reverb. The tools have evolved into adaptive systems that respond to emotion, environment, and intent. The fusion of machine learning, real-time processing, and immersive audio formats has elevated vocal production from post-production cleanup to a central creative force.

From Analog to AI: A Historical Shift

Historically, vocal processing relied on analog compressors, EQs, and tape delays, and engineers spent hours manually adjusting levels and timing. Fast forward to 2025, and AI-driven tools can analyze vocal performances in real time, identifying breath patterns, vibrato consistency, and emotional tone. Tools like iZotope’s RX use neural networks to separate vocals from backing tracks, while Celemony’s Melodyne offers detailed note-level editing of pitch and timing.

- 1970s–1990s: Analog compressors and hardware reverbs dominated.

- Late 1990s–2000s: Auto-Tune (released in 1997) and digital workstations made pitch correction mainstream.

- 2025: AI models predict vocal intent and auto-suggest processing chains.

The Role of Machine Learning in Vocal Clarity

Machine learning algorithms now power noise reduction, de-essing, and dynamic control with far greater precision than fixed-threshold processors. For example, iZotope RX applies machine learning in its repair modules, and intelligent de-essers aim to distinguish vocal sibilance from essential high-frequency content, preserving clarity without harshness. Such models are trained on large collections of vocal recordings, which helps them generalize across genres and languages.

“AI isn’t replacing engineers—it’s giving them superpowers.” — Dr. Lena Torres, Audio AI Researcher at Berklee College of Music

AI-Powered Vocal Tuning and Real-Time Pitch Correction

One of the most visible advancements in vocal processing in 2025 is the leap in real-time pitch correction powered by artificial intelligence. Auto-Tune has offered automatic correction since its 1997 release, but detailed, natural-sounding tuning traditionally meant offline editing in tools like Celemony’s Melodyne. Modern low-latency tuners such as Antares’ Auto-Tune Pro can correct vocals on the fly during live performances or recording sessions.

How AI Enhances Natural Vocal Expression

Traditional pitch correction often resulted in the infamous “robotic” effect. In 2025, AI models are trained to preserve natural vibrato, microtonal inflections, and emotional nuances. By analyzing the singer’s style and genre, the software applies subtle corrections that maintain authenticity. For instance, a jazz vocalist might retain slight pitch bends, while a pop artist receives tighter correction.

- Style-aware tuning: AI detects genre and adapts correction algorithms.

- Emotion-preserving algorithms: Vibrato and dynamics are retained.

- Real-time feedback: Visualizers show pitch deviation during recording.
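The idea of style-dependent correction strength can be sketched in a few lines. This is a minimal illustration, not how any commercial tuner works: the function names, the A4 = 440 Hz reference, and the per-genre strength values are all assumptions made for the example. It measures how far a detected pitch sits from the nearest equal-tempered semitone and pulls it only part of the way there.

```python
import math

A4_HZ = 440.0  # assumed reference tuning

# Hypothetical correction strengths per style (0.0 = off, 1.0 = hard snap).
STYLE_STRENGTH = {"jazz": 0.3, "pop": 0.9}


def hz_to_midi(freq_hz: float) -> float:
    """Convert a frequency in Hz to a fractional MIDI note number."""
    return 69.0 + 12.0 * math.log2(freq_hz / A4_HZ)


def midi_to_hz(note: float) -> float:
    """Convert a (possibly fractional) MIDI note number back to Hz."""
    return A4_HZ * 2.0 ** ((note - 69.0) / 12.0)


def cents_off(freq_hz: float) -> float:
    """Deviation from the nearest semitone in cents (the visualizer value)."""
    note = hz_to_midi(freq_hz)
    return (note - round(note)) * 100.0


def correct_pitch(freq_hz: float, strength: float) -> float:
    """Pull a detected pitch toward the nearest semitone by `strength`."""
    note = hz_to_midi(freq_hz)
    target = round(note)
    return midi_to_hz(note + strength * (target - note))


if __name__ == "__main__":
    sung = 450.0  # slightly sharp of A4
    for style, strength in STYLE_STRENGTH.items():
        print(style, round(cents_off(sung), 1), round(correct_pitch(sung, strength), 2))
```

A low strength leaves most of a pitch bend intact, which is the behavior the jazz example describes, while a value near 1.0 gives the tight correction associated with pop.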

Integration with DAWs and Live Performance Systems

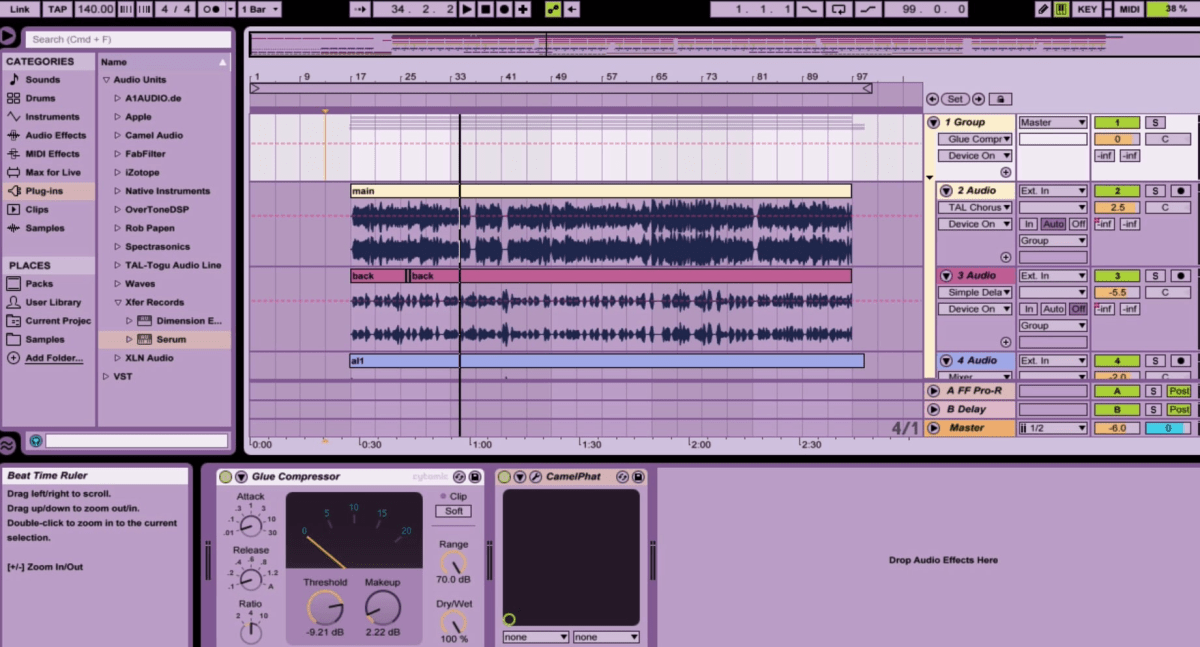

Modern DAWs like Ableton Live 12 and Cubase 14 now feature built-in AI vocal assistants that suggest tuning curves based on the musical key and tempo. In live settings, systems like Waves Tune Real-Time allow performers to sing with confidence, knowing their pitch is being subtly corrected with very low latency.

“We’re moving from correction to enhancement—AI helps singers sound like their best selves, not someone else.” — Mark Linett, Grammy-winning Engineer

Advanced De-Essing and Dynamic Control in 2025

De-essing has long been a critical but often overlooked aspect of vocal processing. In 2025, de-essers have evolved from simple frequency cutters into intelligent dynamic processors that adapt to vocal timbre and delivery.

Frequency-Selective De-Essing with AI Detection

Traditional de-essers targeted a fixed frequency range (usually 5–8 kHz), risking the loss of vocal presence. Newer tools like Waves Sibilance and FabFilter Pro-DS analyze the signal to detect sibilance instead of simply reacting to level in a fixed band. That lets them attenuate harsh ‘s’ sounds surgically while leaving other high-frequency content, such as breath and air, largely untouched.

- Multi-band sibilance detection reduces over-processing.

- Adaptive thresholding adjusts to vocal dynamics.

- Machine learning models learn from user preferences over time.
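To make detection-driven de-essing concrete, here is a deliberately crude sketch. It does not use machine learning or multi-band analysis; it swaps in a simple energy ratio, comparing the energy of a first-difference (high-pass) version of each frame against the frame's total energy. Because it is a ratio, the detector adapts to how loudly the singer performs. The frame size, threshold, and reduction amount are assumed values, and a real de-esser would attenuate only the sibilant band, not the whole frame.

```python
import math


def high_band_ratio(frame: list[float]) -> float:
    """Energy of the first-differenced frame divided by total frame energy.

    The first difference acts as a rough high-pass filter, so bright,
    sibilant frames score high and low-pitched voiced frames score near zero.
    """
    total = sum(s * s for s in frame)
    if total == 0.0:
        return 0.0
    high = sum((frame[i] - frame[i - 1]) ** 2 for i in range(1, len(frame)))
    return high / total


def de_ess(samples: list[float], frame_size: int = 480,
           threshold: float = 0.3, reduction_db: float = 6.0) -> list[float]:
    """Turn down frames flagged as sibilant; leave all other frames untouched."""
    gain = 10 ** (-reduction_db / 20)
    out: list[float] = []
    for start in range(0, len(samples), frame_size):
        frame = samples[start:start + frame_size]
        g = gain if high_band_ratio(frame) > threshold else 1.0
        out.extend(s * g for s in frame)
    return out


if __name__ == "__main__":
    rate = 48000
    vowel = [math.sin(2 * math.pi * 200 * n / rate) for n in range(480)]
    ess = [math.sin(2 * math.pi * 7000 * n / rate) for n in range(480)]
    print(high_band_ratio(vowel), high_band_ratio(ess))
```

In practice the abrupt per-frame gain change would be smoothed with attack and release times to avoid clicks.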

Dynamic Range Management with Smart Compression

Compression in 2025 is no longer just about squashing peaks; it is about shaping vocal dynamics intelligently. AI-assisted plugins such as sonible’s smart:comp analyze the incoming signal and adapt their settings to it, and phrase-aware processing can apply gain reduction only during specific syllables or breaths. This preserves the natural ebb and flow of a performance while ensuring consistency across tracks.
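The core arithmetic behind selective compression is simple enough to show directly. The sketch below assumes a standard hard-knee compressor curve and a hypothetical per-frame `active` mask standing in for whatever phrase analysis decides which syllables or breaths to process. The threshold and ratio are example values.

```python
def gain_reduction_db(level_db: float, threshold_db: float = -18.0,
                      ratio: float = 4.0) -> float:
    """Hard-knee compressor curve: dB of reduction for a given input level."""
    if level_db <= threshold_db:
        return 0.0
    over = level_db - threshold_db
    # Above the threshold, every `ratio` dB of input yields 1 dB of output.
    return over - over / ratio


def compress_levels(levels_db: list[float], active: list[bool],
                    threshold_db: float = -18.0, ratio: float = 4.0) -> list[float]:
    """Apply the curve only to frames flagged active; pass the rest through."""
    return [
        lvl - gain_reduction_db(lvl, threshold_db, ratio) if on else lvl
        for lvl, on in zip(levels_db, active)
    ]


if __name__ == "__main__":
    # Frame levels in dBFS; the second frame is deliberately left alone.
    print(compress_levels([-6.0, -6.0, -30.0], [True, False, True]))
```

With a 4:1 ratio and a threshold of -18 dB, a frame at -6 dB is 12 dB over and comes out 3 dB over, so it receives 9 dB of reduction. The unflagged frame keeps its original level.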

“Smart compression doesn’t just control volume—it enhances expression.” — Sarah Lipstate, Sound Designer and Composer

Spatial Audio and 3D Vocal Placement Techniques

With the rise of immersive audio formats like Dolby Atmos and Sony 360 Reality Audio, vocal processing in 2025 now includes advanced spatialization tools. Engineers can place vocals in a 3D soundfield, creating depth, movement, and emotional impact.

Binaural Rendering for VR and AR Experiences

In virtual reality (VR) and augmented reality (AR) applications, binaural rendering allows vocals to appear as if they’re coming from specific directions. Tools like DearVR PRO and the Facebook 360 Spatial Workstation use head-related transfer functions (HRTFs) to simulate realistic spatial cues. This is crucial for storytelling in VR concerts or interactive audio dramas.

- HRTF-based processing mimics human hearing.

- Real-time head tracking adjusts vocal position as the user moves.

- Integration with game engines like Unity and Unreal.
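One of the spatial cues an HRTF encodes is the interaural time difference (ITD), the small delay between a sound reaching one ear and the other. As a rough illustration, the sketch below uses the classic Woodworth spherical-head approximation in place of measured HRTF data, plus a simple head-tracking step that re-expresses the source angle relative to where the listener is facing. The head radius and function names are assumptions, and the formula as written only covers sources in the frontal half-plane.

```python
import math

HEAD_RADIUS_M = 0.0875  # assumed average head radius
SPEED_OF_SOUND = 343.0  # metres per second in air at roughly 20 degrees C


def relative_azimuth_deg(source_deg: float, head_yaw_deg: float) -> float:
    """Source direction relative to the listener's nose, wrapped to [-180, 180)."""
    return (source_deg - head_yaw_deg + 180.0) % 360.0 - 180.0


def itd_seconds(azimuth_deg: float) -> float:
    """Woodworth ITD for a spherical head; valid for azimuths in [-90, 90]."""
    theta = math.radians(azimuth_deg)
    return (HEAD_RADIUS_M / SPEED_OF_SOUND) * (theta + math.sin(theta))


if __name__ == "__main__":
    # A vocal fixed at 30 degrees to the right while the listener turns their head.
    for yaw in (0.0, 15.0, 30.0):
        az = relative_azimuth_deg(30.0, yaw)
        print(yaw, az, itd_seconds(az) * 1e6, "microseconds")
```

When the listener turns to face the source, the relative azimuth and the ITD both fall to zero, which is what keeps the vocal anchored in the virtual space.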

Object-Based Mixing in Dolby Atmos

Dolby Atmos allows individual vocal tracks to be treated as audio objects with X, Y, Z coordinates. In 2025, engineers take advantage of this by automating object placement based on lyrical content or emotional intensity. For example, a whispered line might be placed behind the listener, while a powerful chorus rises above.

“Spatial vocals aren’t a gimmick—they’re a new dimension of storytelling.” — Chris Bell, Immersive Audio Producer

Vocal Synthesis and AI-Generated Singing Voices

One of the most controversial yet groundbreaking aspects of vocal processing in 2025 is the rise of AI-generated singing voices. Platforms like Vocaloid and Synthesizer V Studio Pro use deep learning to create realistic singing voices from notes and lyrics entered by the user.

How AI Models Learn to Sing Naturally

These systems are trained on vast datasets of professional vocal performances, capturing nuances like breath control, phrasing, and emotional delivery. In 2025, AI singers can even mimic specific artists (with proper licensing), opening new possibilities for legacy projects or virtual performers.

- Neural vocoders generate lifelike timbres.

- Phoneme transition modeling ensures smooth syllable flow.

- Emotion tags allow users to specify ‘happy,’ ‘sad,’ or ‘angry’ delivery.

Ethical and Creative Implications

While AI-generated vocals offer creative freedom, they raise ethical questions about voice ownership and artist consent. In 2025, industry standards are emerging to ensure transparency—requiring disclosure when AI voices are used and protecting original artists’ rights. Organizations like the Audio Engineering Society are leading discussions on responsible AI use in music.

“We must balance innovation with integrity—AI should empower artists, not replace them.” — Dr. Amara Patel, Ethics in AI Researcher

Real-Time Vocal Processing for Live Streaming and Podcasting

With the explosion of live streaming, podcasting, and remote collaboration, real-time vocal processing has become essential for content creators. It ensures professional-quality audio even in home studios or mobile setups.

Low-Latency Plugins and Hardware Integration

Modern audio interfaces from brands like Universal Audio and Focusrite now include onboard DSP that runs vocal processing plugins with near-zero latency. This allows streamers to apply compression, EQ, and reverb in real time without taxing their computer’s CPU.

- UA’s Arrow interface runs UAD plugins with sub-2ms latency.

- Waves’ Nx technology provides virtual monitoring for headphones.

- Integration with OBS and Streamlabs for seamless broadcasting.
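Latency figures like the one quoted above are easier to judge with the underlying arithmetic in hand. The helper below computes the delay contributed by a single audio buffer. It is a simplified model: real round-trip latency also includes converter and driver overhead, which this sketch ignores. The function name is hypothetical.

```python
def buffer_latency_ms(buffer_samples: int, sample_rate_hz: int) -> float:
    """Delay added by one audio buffer, in milliseconds."""
    return buffer_samples / sample_rate_hz * 1000.0


if __name__ == "__main__":
    for size in (32, 64, 128, 256, 512):
        print(size, "samples at 48 kHz:", round(buffer_latency_ms(size, 48000), 2), "ms")
```

At 48 kHz, a 64-sample buffer adds about 1.33 ms, while a 256-sample buffer adds about 5.33 ms. Monitoring through onboard DSP avoids the larger host buffers.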

Automated Noise Suppression and Room Correction

Tools like NVIDIA RTX Voice (since folded into NVIDIA Broadcast) and Krisp use AI to suppress background noise such as keyboard clicks, AC hum, or street noise without degrading vocal clarity. In 2025, these systems also perform real-time room correction, analyzing the acoustic environment and applying EQ to compensate for poor room acoustics.

“Every creator deserves studio-quality sound, no matter their setup.” — Jordan Rudess, Keyboardist and Tech Innovator

Future-Proofing Your Vocal Workflow: Tools and Best Practices

As vocal processing techniques continue to evolve in 2025 and beyond, it’s crucial for producers, engineers, and artists to future-proof their workflows. This means adopting flexible, scalable tools and staying informed about emerging trends.

Choosing the Right Plugins and DAWs

Not all plugins are created equal. In 2025, prioritize tools that offer AI integration, cloud collaboration, and cross-platform compatibility. DAWs like Studio One 7 and Ableton Live 12 lead the pack with intuitive AI assistants and modular routing options.

- Look for AI-powered assistants and adaptive processing.

- Ensure compatibility with spatial audio formats.

- Choose plugins with regular updates and strong developer support.

Building a Scalable Vocal Processing Chain

A modern vocal chain in 2025 might include: AI de-noise → smart de-esser → dynamic EQ → AI pitch correction → spatial reverb → mastering limiter. The key is modularity—being able to swap or reorder elements based on the project. Templates and presets save time, but manual tweaking ensures artistic control.
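Modularity is easiest to see when the chain is just an ordered list of interchangeable stages. The sketch below is an assumption-laden toy: the two stages (a gain trim and a hard limiter) are stand-ins for real processors, and every name in it is hypothetical. The point is only that swapping or reordering elements means editing a list.

```python
from typing import Callable

Samples = list[float]
Stage = Callable[[Samples], Samples]


def make_gain(db: float) -> Stage:
    """Return a stage that scales every sample by a fixed gain in dB."""
    factor = 10 ** (db / 20)
    return lambda x: [s * factor for s in x]


def hard_limit(ceiling: float) -> Stage:
    """Return a stage that clamps samples to the range [-ceiling, ceiling]."""
    return lambda x: [max(-ceiling, min(ceiling, s)) for s in x]


def run_chain(samples: Samples, chain: list[tuple[str, Stage]]) -> Samples:
    """Run the samples through each named stage in order."""
    for _name, stage in chain:
        samples = stage(samples)
    return samples


# Stages are plain list entries, so reordering the chain is a one-line change.
chain = [("trim", make_gain(6.0)), ("limiter", hard_limit(1.0))]
swapped = list(reversed(chain))

if __name__ == "__main__":
    vocal = [0.25, 0.9]
    print(run_chain(vocal, chain))
    print(run_chain(vocal, swapped))
```

Running the limiter before the gain stage lets peaks exceed the ceiling again, a small reminder that order matters as much as the choice of processors.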

“The best vocal chain is the one that serves the song, not the technology.” — Manny Marroquin, Mix Engineer

What are the most important vocal processing techniques for beginners in 2025?

Beginners should focus on mastering AI-powered pitch correction, smart de-essing, and dynamic compression. Tools like Melodyne Assistant and Waves CLA-76 offer intuitive interfaces and real-time feedback, making it easier to learn proper vocal shaping without over-processing.

Can AI replace human vocal engineers in 2025?

No—AI enhances human creativity but cannot replace the artistic judgment of a skilled engineer. While AI handles repetitive tasks like noise reduction or tuning, the final decisions on tone, emotion, and balance still require human ears and experience.

How do I use spatial audio in vocal production?

Start with a binaural plugin like DearVR PRO, or mix in an object-based format using the Dolby Atmos Renderer. Place your lead vocal in the center, then experiment with backing vocals or ad-libs in the surround field. Use automation to create movement, such as a whisper that circles the listener.
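A circling move like that boils down to an automation curve for the panner's position. The sketch below generates one under assumed conventions (x points right, y points forward, the listener sits at the origin). It is not tied to any particular panner or renderer, and the function name and default period are made up for the example.

```python
import math


def circle_position(t_seconds: float, period_seconds: float = 4.0,
                    radius: float = 1.0) -> tuple[float, float]:
    """(x, y) of a source orbiting the listener clockwise, starting in front."""
    angle = 2.0 * math.pi * (t_seconds / period_seconds)
    return (radius * math.sin(angle), radius * math.cos(angle))


if __name__ == "__main__":
    # One automation point every half second over a four-second orbit.
    for step in range(9):
        t = step * 0.5
        x, y = circle_position(t)
        print(f"t={t:.1f}s  x={x:+.2f}  y={y:+.2f}")
```

The resulting points can be entered as automation breakpoints. A longer period gives a slower, less distracting orbit.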

Are AI-generated singing voices legal to use?

Yes, but with caveats. Using AI voices based on real artists requires proper licensing. Platforms like Vocaloid sell voicebanks that are licensed for use in your own productions, subject to each product’s terms, but cloning a celebrity’s voice without permission can infringe publicity and other rights in many jurisdictions.

What hardware is best for real-time vocal processing?

Audio interfaces with onboard DSP, like Universal Audio’s Apollo series or Focusrite’s Red 8Pre, are ideal. They run plugins with near-zero latency, allowing real-time monitoring with effects. Pair them with high-quality condenser mics and acoustic treatment for best results.

The landscape of vocal processing in 2025 is more dynamic and intelligent than ever before. From AI-driven tuning and noise suppression to immersive spatial audio and synthetic voices, these innovations are reshaping how we create and experience vocal music. While technology advances rapidly, the human voice remains the heart of expression. The best results come not from relying solely on algorithms, but from using these tools to enhance authenticity, emotion, and connection. As we move forward, the fusion of art and science in vocal processing will continue to push the boundaries of what’s possible in sound.
